XR development has come a long way in recent years, mostly because game engines are becoming easier to learn and use. This matters to L&D professionals building XR (i.e., augmented reality, mixed reality, virtual reality, 360-degree video) eLearning experiences because development cycles are getting shorter now that designers can take part in the process without writing code. In this article, we explore one upcoming feature of the Unity game engine: what it does and why it's important to L&D professionals.

A high-level understanding of Unity MARS and AR Foundation

We recently learned about one of Unity’s upcoming features, the Mixed and Augmented Reality Studio (MARS), which is used in conjunction with AR Foundation, a recently launched Unity tool. We’ve already seen that these two features combine into a powerful toolset for AR developers, though we’re admittedly still learning how to use them both. Note that MARS is still in beta testing and has not been released to the general public yet.

There's a lot of technical information about Unity's AR Foundation, and virtually no information about MARS anywhere. Here is our best understanding of both from a layperson's point of view. AR Foundation is Unity's tool for deploying an AR project to the major AR platforms. This means you can build your AR experience once and deploy it to many AR-capable devices, such as an iOS mobile device, an Android mobile device, a Microsoft HoloLens head-mounted display (HMD), or a Magic Leap HMD. MARS was developed to work alongside AR Foundation, greatly improving the speed and ease of development.
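To make the "build once, deploy to many devices" idea concrete, here is a minimal sketch of the general pattern, assuming a design in which app code talks only to a shared interface and each platform supplies its own implementation behind it. It is written in Python purely for illustration; AR Foundation itself is a C# package for Unity, and `PlaneProvider`, `IOSProvider`, `AndroidProvider`, and `place_content` are hypothetical names, not its API.

```python
from abc import ABC, abstractmethod
from typing import List


class PlaneProvider(ABC):
    """Hypothetical shared interface that the app's own logic is written against."""

    @abstractmethod
    def detect_planes(self) -> List[str]:
        ...


class IOSProvider(PlaneProvider):
    def detect_planes(self) -> List[str]:
        # Stand-in for platform-specific surface detection on iOS.
        return ["iOS: horizontal plane"]


class AndroidProvider(PlaneProvider):
    def detect_planes(self) -> List[str]:
        # Stand-in for platform-specific surface detection on Android.
        return ["Android: horizontal plane"]


def place_content(provider: PlaneProvider) -> str:
    """App logic written once; it never checks which platform it is running on."""
    planes = provider.detect_planes()
    return f"placed on {planes[0]}" if planes else "nothing to place on"


for provider in (IOSProvider(), AndroidProvider()):
    print(place_content(provider))
```

Adding a new device in this pattern means writing one more provider class; `place_content` stays unchanged, which is the time saving the paragraph above describes.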

One of the most challenging and time-consuming aspects of developing an AR experience is building virtual elements into the real world. As Charles Migos, global director of design at Unity, said in a MARS presentation at Unite Copenhagen 2019, "Getting real world data into a Unity project is a difficult process…Iteration cycles were too long, and required semi-complete code to test." In practice, this means writing nearly complete code, prototyping and testing in both the real and virtual worlds, then revising the code and repeating the whole process ad infinitum.

MARS gives designers and developers the flexibility to more easily create and manipulate the world they want to use for their AR experiences. You can use your mobile device's existing sensors, cameras, and data to capture an accurate representation of the real world around you and import this information directly into your Unity project in real time. MARS then quickly converts this real-world data into a virtual world that you can immediately use for your AR eLearning experience. You can think of MARS as handling the inputs for your XR experiences and AR Foundation as handling the outputs.

The power of MARS Simulation View

MARS includes a real-time editor called the Simulation View that provides a what-you-see-is-what-you-get (WYSIWYG) development interface. In the Simulation View, you can change where objects are placed and how they interact with the real world. You can still write custom scripts to create an even more detailed eLearning experience.

Because MARS is built on top of AR Foundation, it supports detection of horizontal and vertical surface planes. Plane detection means that your AR eLearning experience can recognize where horizontal or vertical surfaces exist in the captured environment. You can give your objects parameters, such as when to appear and what type, height, or size of surface to appear on. Then, if a player's AR device detects surfaces with matching characteristics (e.g., height, size, shape, location), your objects will automatically appear for the player. You can even test whether these parameters will work in the real world using the Simulation View.
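The matching step described above can be sketched in a few lines. This is a minimal, hypothetical illustration in Python, not the MARS or AR Foundation API (both are used through C# in Unity): `Plane`, `Conditions`, `matches`, `first_match`, the room data, and the thresholds are all invented to show the idea of "appear only on a surface whose orientation, height, and size satisfy the author's parameters."

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class Plane:
    """Hypothetical stand-in for a surface reported by plane detection."""
    name: str
    orientation: str   # "horizontal" or "vertical"
    height_m: float    # height above the floor, in meters
    width_m: float
    depth_m: float


@dataclass
class Conditions:
    """Hypothetical placement parameters an author attaches to a virtual object."""
    orientation: str
    min_height_m: float = 0.0
    max_height_m: float = float("inf")
    min_width_m: float = 0.0
    min_depth_m: float = 0.0


def matches(plane: Plane, cond: Conditions) -> bool:
    """Return True only if the detected plane satisfies every condition."""
    return (
        plane.orientation == cond.orientation
        and cond.min_height_m <= plane.height_m <= cond.max_height_m
        and plane.width_m >= cond.min_width_m
        and plane.depth_m >= cond.min_depth_m
    )


def first_match(planes: List[Plane], cond: Conditions) -> Optional[Plane]:
    """Return the first detected plane where the object should appear, or None."""
    for plane in planes:
        if matches(plane, cond):
            return plane
    return None


# Made-up room data, standing in for what a device scan might report.
detected = [
    Plane("floor", "horizontal", 0.0, 4.0, 3.0),
    Plane("wall", "vertical", 1.2, 3.0, 0.0),
    Plane("desk", "horizontal", 0.75, 1.4, 0.7),
]

# Put a character on a desk-height horizontal surface at least 1 m wide.
desk_rule = Conditions("horizontal", min_height_m=0.6, max_height_m=1.2, min_width_m=1.0)
target = first_match(detected, desk_rule)
print(target.name if target else "no matching surface")  # → desk
```

Swapping `detected` for a different simulated room and rerunning the check is, in miniature, the kind of quick iteration the Simulation View is meant to support.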

This matters because it saves prototyping time: instead of building and deploying to a device to check whether your parameters work, you can test them instantly in the Simulation View. As the Unity website says, the Simulation View "window includes tools and user interface to see, prototype, test, and visualize robust AR apps as they will run in the real world." Or, as Andrew Maneri, data + content lead, MARS at Unity, said at Unite Copenhagen 2019, "The goal of MARS is to get the iteration loop as small as possible at every stage of development."

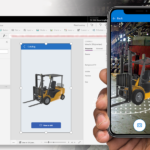

Refer to Figure 1 for an example of prototyping an AR experience in real time. In this example, the AR device captured data from an office environment and sent it to Unity via MARS. Then, using asset parameters previously created in AR Foundation (here, plane detection), Unity immediately turned the desktop into a virtual jungle and placed a character on it for the player to view in the AR device. The player controlled the character, moving her around the space, through the desktop jungle, and down to the floor.

Building the Mirrorworld

Tools such as AR Foundation and MARS make it easier to build what Kevin Kelly calls the Mirrorworld. In this future Mirrorworld, every physical person, place, or thing has a virtual equivalent. This will not only enable humans to sense, understand, and interact with information more effectively, but also allow computers to sense, understand, and interact with the physical world. Or, as Timoni West, director of XR, Labs at Unity, said at Unite Copenhagen 2019, with MARS "[w]e can really move into this area of distributed computing where computers do what we actually want them to do," adding that Unity is providing "the tools for you to take advantage of it." Unity MARS and AR Foundation are powerful tools for L&D professionals to learn, enabling us to create XR learning experiences more quickly and easily and to deploy them across the major XR platforms.

Source:

https://learningsolutionsmag.com/articles/fast-multi-platform-ar-development-using-unity-mars