Overview

This is an abbreviated laundry list of common widgets for 3D interfaces collected from a variety of sources around the net.

What this post is not about

Topics this post is NOT about: I’m going to skip over usability, arranging space, aesthetics, user navigation, user control, voice input, gestures and gaze. I’m ignoring 2D buttons, 2D widgets, spinners and progress bars. I’m also skipping over implementation to some degree (although mentally I’m targeting WebXR for Firefox Reality on HMDs with 6DoF controllers). I’m glossing over data visualization. I’m also glossing over the larger topic of design principles; on that, here’s a good primer on an implementation approach using AFrame. If you want to look into design more deeply there are many, many guides. See also Spatial, Nielsen Norman Group, Intel, Valve, Unreal, Microsoft, Leap Motion, Magic Leap and Google.

Where ideas were lifted from

For this compilation, ideas are lifted from toolkits such as ThreeJS, AFrame, VRTK, ReactVR, Unity3D, HoloLens, Leap Motion and the Magic Leap. There is also extensive documentation on 3D interfaces in books such as “3D User Interfaces: Theory and Practice”. Also see UXofVR. Many development shops have studied 3D interface ideas over the years, such as Oblong and Holographic Interfaces. There are many individual voices including Jacob Payne, Timoni West and Kharis O’Connell. The video game industry also has many “in game” 3D object interactions and UX going back about 20 years. There’s a longer technical history stemming back to work 50 years ago by Ivan Sutherland. There is also a pre-technical history of interior design, object placement, signage and real world buttons, levers and interactions that form a skeuomorphic design language underneath digital interfaces. Finally, work is ongoing; see for example the IEEE VR 2019 conference contest on 3D UI: http://ieeevr.org/2019/program/3dui.html

The List

1. Objects in 3D

First we note a common core for all 3D objects. Objects have color, place and size; they may be visible or animated, and they may or may not support interaction. They may or may not have collision hulls, propagate events, or have behaviors. Typical expectations include:

- Absolute or relative position, orientation, scale, style, color, transparency, texture, border.

- Priority or importance (used when deciding which features to cull).

- Visibility, which can depend on context, on the total volume of visible objects and/or on the importance of the object (sometimes objects should remain visible even if they are technically occluded, and sometimes an object should become invisible simply because it’s obstructing something more important).

- Collision hulls, with filters/layers, reporting which events go to which observers. For example, VR drums may collide only with VR drumsticks and not with VR hands directly, or VR buttons may collide with VR hands but not with other VR buttons.

- Non-diegetic versus “In World” (some features may be pinned to the HMD).

- Contrast — for example a text-label may by default have a neutral backdrop to help text be visible against arbitrary backgrounds.
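The collision filter idea from the list above can be sketched with bitmasks. This is only an illustration: the layer names, the `Collider` shape and the `canCollide` helper are hypothetical and not taken from any particular engine or toolkit.

```typescript
// Hypothetical layer bits; each object belongs to exactly one layer.
const Layer = {
  Hand: 1 << 0,
  Drumstick: 1 << 1,
  Drum: 1 << 2,
  Button: 1 << 3,
} as const;

interface Collider {
  name: string;
  layer: number; // the layer this object belongs to
  mask: number;  // the layers this object is willing to collide with
}

// Two objects collide only if each one accepts the other's layer.
function canCollide(a: Collider, b: Collider): boolean {
  return (a.mask & b.layer) !== 0 && (b.mask & a.layer) !== 0;
}

// Drums only react to drumsticks; buttons only react to hands.
const drum: Collider = { name: "drum", layer: Layer.Drum, mask: Layer.Drumstick };
const drumstick: Collider = { name: "drumstick", layer: Layer.Drumstick, mask: Layer.Drum };
const hand: Collider = { name: "hand", layer: Layer.Hand, mask: Layer.Button };
const button: Collider = { name: "button", layer: Layer.Button, mask: Layer.Hand };
```

With this setup `canCollide(drum, drumstick)` and `canCollide(button, hand)` are true, while a hand touching a drum or a button touching another button is filtered out before any event is reported.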

2. Semantic Hints

In immersive 3D environments a newish idea of ‘semantic rules’ is emerging.

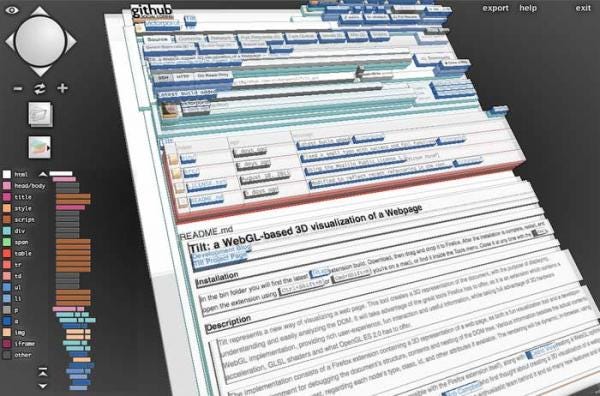

This maps to layout considerations we are familiar with in 2d. In 2d we are used to concepts such as having an HTML DOM element stick to the top of a document or be centered in the middle of the document — where page layout can vary but the intent remains constant.

I explore this a bit in my own work:

A VR/AR environment designer may believe they want to specify where an object is placed absolutely, but what they may in fact want is to specify an “intent”. The placement or behavior of an object is then a function of the surroundings it is placed in. I’m referring to this idea as “semantic hints”, and these may be codified as properties or flags on objects to reduce the burden on the designer. Here are the kinds of things a designer may want to express:

- ‘be on ground’

- ‘be on a wall’

- ‘billboard’

- ‘tagalong’

- ‘support occlusion’

- ‘pinned to world’ (either by GPS or by a SLAM map or an AR Tag)

- ‘pinned to another object’

- ‘collidable/selectable’

- ‘has shadow’

- ‘perceptual size in field of view’

- ‘pointing at’ (an arrow may point at a subject)

- ‘stick to surfaces’

These qualities are common and, if codified, can provide flexible expression. They can make an AR experience more portable as well (a set of widgets inside one SLAM map may need to be reconstituted inside a different SLAM map). For this reason I predict that an HTML- or CSS-like declarative grammar for specifying intent will emerge. Hints can range from the simple to the complex and I don’t want to dwell too much on this, but it’s worth noting that this can also involve a machine learning component to identify and partition space.
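To make the idea of hints as flags concrete, here is a minimal sketch. Everything in it is an assumption for illustration: the hint names, the `Surroundings` shape (a single floor height and a single wall plane) and the `resolvePlacement` function are made up, and a real system would get its surfaces from plane detection or a SLAM map.

```typescript
type SemanticHint = "onGround" | "onWall" | "billboard" | "tagalong";

interface Vec3 { x: number; y: number; z: number }

interface HintedObject {
  id: string;
  hints: SemanticHint[];
  position: Vec3; // the designer's suggested position, treated as a starting point
}

// Hypothetical, heavily simplified description of the detected environment.
interface Surroundings {
  floorY: number; // height of the detected floor
  wallZ: number;  // one detected wall, modelled as the plane z = wallZ
}

// Resolve the designer's intent against the environment the object lands in.
// Only the two placement hints are handled; the rest are left to other systems.
function resolvePlacement(obj: HintedObject, env: Surroundings): Vec3 {
  const p: Vec3 = { x: obj.position.x, y: obj.position.y, z: obj.position.z };
  if (obj.hints.indexOf("onGround") !== -1) p.y = env.floorY;
  if (obj.hints.indexOf("onWall") !== -1) p.z = env.wallZ;
  return p;
}

const rug: HintedObject = { id: "rug", hints: ["onGround"], position: { x: 1, y: 0.4, z: -2 } };
const poster: HintedObject = { id: "poster", hints: ["onWall"], position: { x: 0, y: 1.5, z: -1 } };

const livingRoom: Surroundings = { floorY: 0, wallZ: -3 };
const office: Surroundings = { floorY: -0.2, wallZ: -5 };
```

The same `rug` and `poster` definitions resolve to different positions in `livingRoom` and `office`, which is the portability argument: the intent stays constant while the placement varies.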

3. Labels, Text, Flat Images

I’m going to gloss over this a bit here and circle back a bit further on when I talk about Card based layouts.

If you look at say React or Google Material Design you’ll see fine grained distinctions between labels, text and so on (which I am not doing here).

This topic by itself could be an entire career: simply dealing with anti-aliasing, fonts, kerning, layout, contrast, readability and language localization. Beyond literal letters there are other grammars, such as musical notation, and there are opportunities to use animation or color to increase the bandwidth of communication, since the medium is animated rather than static like traditional paper. Most of what we’re going to be doing in VR is reading text, so it’s important to get it right.

4. 3D Geometry and Statue Animated Geometry

Objects may “look” rich and complex but they’re often just statue animations and don’t do much at all. However there’s still a lot that can be said with pure visuals even if they are not “interactive” in a deep sense. This can be used to convey all kinds of information, humor and the like. Whenever I’m auditing a video game I mentally replace every visual animation with a grey box, to isolate real interaction from eye candy. But I want to acknowledge that there’s a role for visual appeal.

5. Manipulators

Manipulator widgets are common in authoring tools. These are buttons or widgets that appear on demand to help manipulate other objects. More recently in VR these are easier to write since you can directly move in 3D rather than in 2D. In a sense virtual hands are also manipulators but I treat those separately.

I’d arguably list navigation helpers here as well (not to focus on navigation, which I want to avoid, but to note that there’s a visual UI helper here that acts as a bridge between the person playing the experience and the machine understanding their intent).

6. Buttons, Radio Buttons, Sliders, Dials

These are skeuomorphic references to the age of 2D UX which provide control in a well understood manner with a low learning curve.

One would imagine there would be more widget collections available for AFrame or ThreeJS developers. Oddly, I didn’t see a lot of resources for basic buttons. Here is what I did see:

- https://github.com/caseyyee/aframe-ui-widgets

- https://github.com/etiennepinchon/aframe-material

- https://blog.neondaylight.com/build-a-simple-web-vr-ui-with-a-frame-a17a2d5b484

- https://wirewhiz.com/aframe-button/

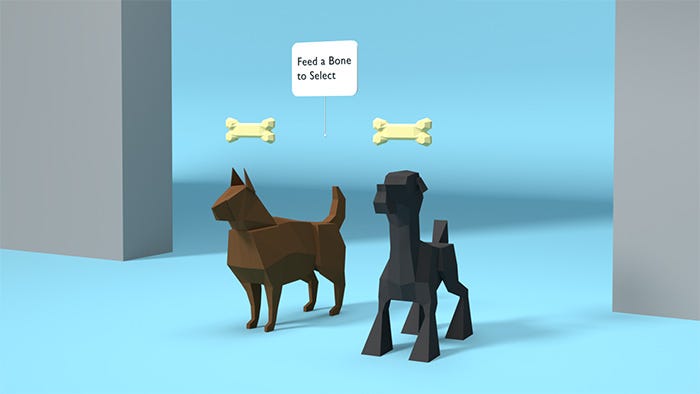

7. User Hints; sparkles, arrows, glows, navigational cues, animation

User Hints provide cues that an object is selectable, is a focus, is in-progress or is in a certain position relative to the viewer.

Often hints may suggest that something is a button or is selectable in some way. In this case there is a combination of an explicit text label or panel with explicitly highlighted geometry that is differentiated from other viewable elements.

Animation can also be a visual cue about selection or state change, notifying the user that something has relevance.

8. Hands, Faces, Bodies

Representations of embodiment are themselves common VR objects and a form of ‘widget’ or control: representations of hand controllers, Leap Motion representations of hands, Face Puppet representations of faces. The Microsoft Kinect comes to mind. Even drumsticks for manipulating virtual drums (such as Sound Stage VR) come to mind.

In my mind hands and the objects they manipulate melt into each other. The classic example is how a cane becomes an extension of a blind man’s sense of touch. So it may be best to think of these as gestalt widgets.

Faces in particular are hugely expressive tools that humans use — arguably expressing more bits per second than finger input via keyboard. Here’s an example of this being represented in a UI:

Body pose tracking with the Kinect is a typical example. Note that I’m less interested in the tracking itself than in the representation of the body as a virtual handle or object in VR, and in its interactions with other VR objects.

9. Keyboards, Pianos, Palettes

Interestingly, a wide range of palettes is emerging (see VR editors). I single these out simply because they’re evolving rapidly and seem to deserve attention. They serve both as examples of what can be done and as elements that could be included wholesale in a library. In 2D we have dialogs, input widgets, panels and forms. The 3D equivalent can include 2D layouts, but the novel feature that appears to be showing up in 3D is the Input Palette.

10. Cards

In 2D we have a pattern of a layout, occasionally driven by a schema, and populated by data. HTML defines a Document Object Model which can be used presentationally to represent data. In 3D an entire document can be considered a presentational widget for presenting a specific data object. A collection of similar data objects can be presented in the same way. Card-like layouts are common in 2D and will likely be common in 3D as well, but the layouts won’t consume the entire page; instead they will be “widgets” themselves.

More specific than an “HTML” layout in general, a card is a representation of a category of subjects in a standardized way. For example a collection of cards representing possible vacation destinations to visit may also show the current weather conditions at those destinations.
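A rough sketch of the card idea, using the vacation example above: one schema, one presentation function, many data objects. The `Destination` and `Card` types and the `toCard` function are hypothetical names for illustration, and the sample data is invented.

```typescript
// The data object: one record per subject in the category.
interface Destination {
  name: string;
  weather: string;
  temperatureC: number;
}

// The presentational widget: every destination is shown the same way.
interface Card {
  title: string;
  lines: string[];
}

function toCard(d: Destination): Card {
  return {
    title: d.name,
    lines: [`Weather: ${d.weather}`, `Temperature: ${d.temperatureC}°C`],
  };
}

// Invented sample data, purely for illustration.
const destinations: Destination[] = [
  { name: "Lisbon", weather: "sunny", temperatureC: 22 },
  { name: "Reykjavik", weather: "windy", temperatureC: 4 },
];

const cards: Card[] = destinations.map(toCard);
```

Each `Card` could then be handed to whatever renders text panels in the scene; the point is only that the layout is defined once and reused per data object.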

11. Collections; lists, groups, folders, file systems

Common collection patterns are carried over from 2D but novel 3D layouts can be used. Often collected items have some kind of structured relationship to each other (precedence, priority, centrality) depending on the categorization scheme. Occasionally those collections are views or proxies for a remote datastore or filesystem.
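As one example of a 3D layout for a collection, items can be spread along an arc around the viewer instead of in a flat list. This sketch assumes the viewer stands at the origin looking down the negative z axis (the convention ThreeJS and WebXR use); the `layoutOnArc` function and its parameters are made up for illustration.

```typescript
interface Vec3 { x: number; y: number; z: number }

// Place `count` items on a horizontal arc of the given radius, centred in
// front of a viewer at the origin who looks down the negative z axis.
function layoutOnArc(count: number, radius: number, spreadRadians: number, height: number): Vec3[] {
  const positions: Vec3[] = [];
  for (let i = 0; i < count; i++) {
    // t runs from 0 to 1 across the arc; a single item sits in the middle.
    const t = count === 1 ? 0.5 : i / (count - 1);
    const angle = -spreadRadians / 2 + spreadRadians * t;
    positions.push({
      x: radius * Math.sin(angle),
      y: height,
      z: -radius * Math.cos(angle),
    });
  }
  return positions;
}

// Three items, 2 m away, spread over 90 degrees at roughly eye height.
const shelf = layoutOnArc(3, 2, Math.PI / 2, 1.5);
```

Ordering the input by precedence or priority before calling the function would put the most important item wherever the scheme wants it, for instance in the centre of the arc.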

12. Dataflow

Objects can pipe data to each other. This can be used to represent work over time or code.
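A minimal sketch of objects piping data to each other, assuming a simple push model. The `Port` class and `pipe` helper are hypothetical, not part of any toolkit mentioned here; the slider-to-label chain is just an example wiring.

```typescript
type Listener<T> = (value: T) => void;

// An output port that any number of downstream objects can connect to.
class Port<T> {
  private listeners: Listener<T>[] = [];

  connect(listener: Listener<T>): void {
    this.listeners.push(listener);
  }

  send(value: T): void {
    this.listeners.forEach((listener) => listener(value));
  }
}

// A node in the graph: transforms each incoming value and forwards the result.
function pipe<A, B>(input: Port<A>, transform: (a: A) => B): Port<B> {
  const output = new Port<B>();
  input.connect((value) => output.send(transform(value)));
  return output;
}

// Example wiring: a slider (0..1) feeds a percentage node, which feeds a label.
const slider = new Port<number>();
const percent = pipe(slider, (v) => Math.round(v * 100));
const labelText = pipe(percent, (p) => `${p}%`);

const shown: string[] = [];
labelText.connect((text) => shown.push(text));
```

Calling `slider.send(0.25)` pushes the value through both nodes and the label receives `"25%"`. In a 3D editor the ports would be visible sockets and the connections visible wires.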

13. Triggers, sensors, wires, timers

A common pattern for video games is a distinction between a play mode and a construction mode. There’s a hidden game grammar visible to the game designer which consists of collision hulls, regions or triggers that can fire events off, delay and split events and so on. In a sense these can be thought of as invisible buttons, or scripts with a spatial property or aspect.

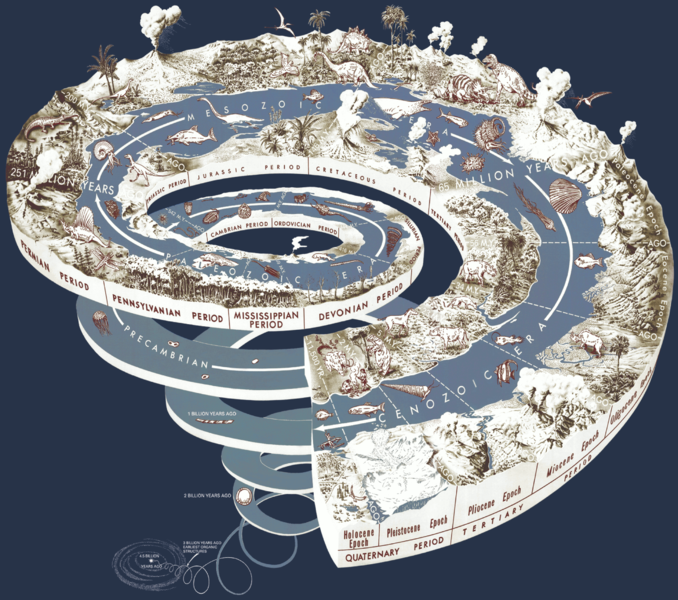
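The hidden game grammar described above can be sketched as a trigger region wired to a delay timer. This is an illustration under assumptions: the `TriggerRegion` and `DelayTimer` classes are hypothetical, the region is a plain axis-aligned box, and time is advanced manually through `tick` rather than by a real frame loop.

```typescript
interface Vec3 { x: number; y: number; z: number }

// An invisible box that fires once each time a tracked point enters it.
class TriggerRegion {
  private min: Vec3;
  private max: Vec3;
  private onEnter: () => void;
  private inside = false;

  constructor(min: Vec3, max: Vec3, onEnter: () => void) {
    this.min = min;
    this.max = max;
    this.onEnter = onEnter;
  }

  update(p: Vec3): void {
    const nowInside =
      p.x >= this.min.x && p.x <= this.max.x &&
      p.y >= this.min.y && p.y <= this.max.y &&
      p.z >= this.min.z && p.z <= this.max.z;
    if (nowInside && !this.inside) this.onEnter();
    this.inside = nowInside;
  }
}

// Delays an event by a fixed number of seconds of simulated time.
class DelayTimer {
  private delaySeconds: number;
  private onFire: () => void;
  private remaining: number | null = null;

  constructor(delaySeconds: number, onFire: () => void) {
    this.delaySeconds = delaySeconds;
    this.onFire = onFire;
  }

  start(): void {
    this.remaining = this.delaySeconds;
  }

  tick(dt: number): void {
    if (this.remaining === null) return;
    this.remaining -= dt;
    if (this.remaining <= 0) {
      this.remaining = null;
      this.onFire();
    }
  }
}

// Wiring: entering the doorway starts a one second timer that opens the door.
const door = { open: false };
const openDoorLater = new DelayTimer(1.0, () => { door.open = true; });
const doorway = new TriggerRegion({ x: -1, y: 0, z: -1 }, { x: 1, y: 2, z: 1 }, () => openDoorLater.start());
```

In construction mode a designer would see the box and the wire between the two objects; in play mode only the door opening is visible.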

14. Globes, timelines, radar

There is a class of complex but commonly desired widgets, such as globes, timelines and radar displays.

15. Fancy Objects

Fancy widgets can be part of an operator-in-the-loop training scenario.

In this realm there are full-on simulations of industrial tree cutters, flight simulators and endless other complex machinery. It’s safer to train on simulators, and in some cases the simulations can provide telepresence, or human-machine hybrid telepresence. These ‘tools’ become fully immersive environments or experiences, and are themselves made up of many smaller pieces.

For low Earth orbit space station assembly, or for decommissioning a nuclear reactor, this may be the only way to put intelligent operators in the loop.

Closing comments

This was a quick review of widgets for VR/AR. We’re seeing many exciting new interaction patterns and the field is continuing to explode. It’s not yet clear that there are comprehensive widget libraries or toolkits that a novice can use today to knock out AAA experiences for a short-term project or market. In future work I hope to provide some examples of this, probably using WebXR.

Source:

Photo: From 3D Design Patterns http://www.xr.design/tags/ui

https://arvrjourney.com/laundry-list-of-ux-patterns-in-vr-ar-24dae1e56c0a