With the help of digital technologies, specialists in research, education, and ICT are trying to improve learning. AR and VR offer new approaches. Experts showed which ones at the spring conference of the Schweizerische Stiftung für audiovisuelle Bildungsangebote in Bern.

Knowledge

Pixel participated in the second meeting of the European project VR@School – Future schools using the power of virtual and augmented reality, which was held on 30–31 May 2019 in Bragança (Portugal). VR@School is funded by the European Commission in the framework of the Erasmus+ Programme, KA2 – Strategic Partnership in the field of School Education. The project was written and is coordinated by Liceul Teoretic de Informatica „Grigore Moisil“, based in Iasi, and involves Pixel in the transnational partnership. VR@School aims to support Virtual Reality as a teaching methodology that helps students feel immersed in an experience, gripping their imagination and stimulating thought in ways not possible with traditional books, pictures or videos, and that facilitates a far higher level of knowledge retention. During the second meeting, the project partners finalized the first output, the Teach@School Online Library, which collects Educational Technology and Open Education Resources. In addition, the partners discussed the structure of the Teachers Guide on Virtual Reality in school education, to be produced in the forthcoming months. More information about VR@School is available at: https://www.vr-school.eu/.

Source:

https://europlan.pixel-online.org/news.php?id=464

According to a study by the digital association Bitkom, interest in virtual reality (VR) is growing. The survey finds that almost one in three Germans (32 percent) has tried a virtual reality headset at least once. That is an increase of eight percent compared with the previous year. Ten percent of those who have already tried a VR headset own such a device.

For the representative survey, Bitkom polled more than 1,200 people aged 16 and over. Bitkom expert Dr. Sebastian Klöß concludes that virtual reality will soon reach the mass market, and he gives reasons: “Numerous manufacturers now offer virtual reality headsets, and there are more and more suitable apps for users.” The number of potential users has also risen: according to the study, just over a fifth (21 percent) can imagine setting off into virtual worlds with a VR headset in the future. In 2018 the figure was 16 percent. There are also Germans who are skeptical about the whole thing, however: 45 percent say they will not use a virtual reality headset in the future either. This is not for lack of awareness, since 90 percent of all survey participants have already heard or read about the technology.

VR headsets can be used in many areas

Those who have already tried a VR headset, or even own one, find many uses for it. Seven in ten users (70 percent) have used one to dive into virtual worlds and play computer or video games. Almost half (49 percent) have used a VR headset and map services to travel to virtually rendered places. Just over two in five users (42 percent) have watched films with the (still relatively new) gadget. For 24 percent, the VR headset was used during sport, for example on the treadmill. A similar share (23 percent) have used VR to visualize apartment or house plans. 15 percent each visited museums, trade fairs, or exhibitions with it, or enjoyed a music concert. And seven percent have used virtual reality for education and learning projects.

“Every year the barriers to entry for virtual reality fall: the headsets are becoming more powerful and there are more and more mature VR applications. Users can immerse themselves in any number of worlds, and that is what makes the technology so exciting,” says Klöß.

Note on methodology: For the representative survey, 1,224 people in Germany aged 16 and over were interviewed by telephone. The questions were: “Have you already heard or read about virtual reality headsets?”, “Can you imagine using a virtual reality headset?” and “For which content have you already used a virtual reality headset?” (wag)

Source:

Photo: franz12 / fotolia.com

Virtual reality offers the unique possibility to experience a virtual representation as our own body. In contrast to previous research that predominantly studied this phenomenon for humanoid avatars, our work focuses on virtual animals. In this paper, we discuss different body tracking approaches to control creatures such as spiders or bats and the respective virtual body ownership effects. Our empirical results demonstrate that virtual body ownership is also applicable for nonhumanoids and can even outperform human-like avatars in certain cases. An additional survey confirms the general interest of people in creating such experiences and allows us to initiate a broad discussion regarding the applicability of animal embodiment for educational and entertainment purposes.

Study: VR makes it especially easy to feel like an animal

Can you overcome your fear of spiders in the body of a spider?

Researchers at the University of Duisburg-Essen have investigated how well people can feel their way into the body of a VR animal. After all, freeing oneself from one's own physical constraints by embodying digital beings is one of the great promises of VR: simply being someone else for once, or something else.

The researchers placed a total of 26 participants in the body of a tiger, a spider, or a bat. Using the VR system, they transferred the movements of the human body to the VR animal as precisely as possible. In a digital zoo, participants could look at their animal body in a mirror and test its range of movement.

Full-body tracking is particularly immersive, but also more strenuous. Image: University of Duisburg-Essen

The researchers tested two different first-person input schemes: either the participant's body was mapped completely onto the VR animal (FB), or, for less physical effort, only the legs of human and animal were synchronized. The latter input method made sense only for the spider and the tiger, not for the bat.

The result: the illusion of being inside a foreign body works about as well with animal bodies as with a human avatar. This holds even for the spider, whose skeletal structure is completely different from a human's.

“Our work provides convincing evidence that animal avatars can keep up with, and even outperform, the humanoid representation,” the researchers write.

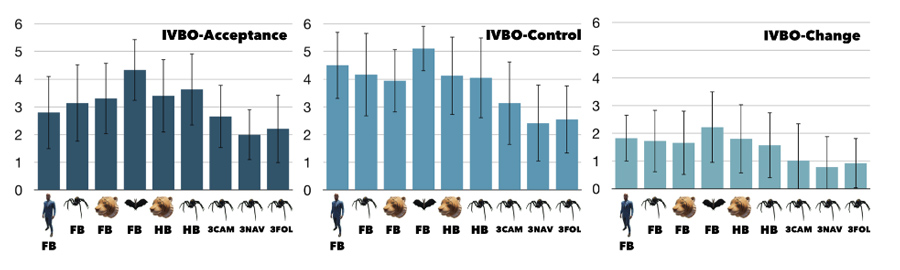

Acceptance (left) shows how convincing the body illusion is. Control (center) describes how well the body can be controlled. Change describes changes in self-perception: the real body in a new form. Image: University of Duisburg-Essen

In some cases the animal avatar even beats the human avatar: in flight scenarios, for example, the bat comes out ahead. The researchers therefore see particularly great potential for education and games in the embodiment of flying animals.

Source:

https://arxiv.org/abs/1907.05220

Photo: University of Duisburg-Essen; Andrey Krekhov, Sebastian Cmentowski, Jens Krüger

AR and VR have delivered on the promise to supercharge the enterprise’s education and training industry. From workflow support for sectors like manufacturing where factory floors are made consistently more safe and productive, to teaching employees soft skills that allow them to better adapt to the ever more nuanced demands of the modern workplace, these “embodied” digital formats inherently drive positive results because both our cognition and bodies believe the experience to be real.

I began covering the education niche of VR in 2016, primarily through the lens of the remarkable journey of U.K.-based VirtualSpeech, a startup that has never raised a single drop of venture funding and yet has found a viable product-market fit. The team reached it through a process of trial and error that has, since last year, put them in revenue-positive territory.

VirtualSpeech is based on a hybrid model that pairs VR with traditional course programs like e-learning and in-person training, affording users a chance to practice what they have learnt in realistic environments in order to really absorb and integrate essential soft skills much more effectively.

“User engagement for our courses has also been very encouraging, particularly for the VR part of the courses, where the majority of users complete all their course scenarios. We’ve read about how engagement for online courses is typically low, so we are pleasantly surprised by our engagement levels,” says Dominic Barnard, a cofounder at VirtualSpeech. “As we progress forward, I see the B2B route for us becoming a key avenue for growth, as companies begin to shift budget away from expensive in-person training and into VR training, where users’ performance can be monitored and ROI accurately measured.”

That most of the success cases in the news about VR and AR education have been fairly isolated to the needs of the enterprise isn’t surprising, thanks to the simple fact that the public education sector is by comparison a bureaucratic jungle of red tape that makes it that much more difficult to penetrate for spatial computing startups that have limited resources and runway.

There is, however, the prospect of reshaping, rejuvenating, and revolutionizing the educational landscape for the next generation of youth. After all, spatial computing triggers our physicality and appeals to our imagination; a unique combination that allows this interactive digital format to help us store learning in an emotional and instinctively charged way that has never been possible before.

Calling all ‘volumetric thinkers’

Last month on The AR Show, host Jason McDowall interviewed Amy Peck, the CEO at EndeavorVR, who spent a good chunk of the podcast discussing the promise of spatial computing for early education, a subject that particularly resonates with Peck since she is the mother of two children she describes as being “diametrically opposed learners.” Her eldest, for example, is a very linear academic learner who does well on tests and therefore tends to excel in traditional academic environments. Her younger son, on the other hand, is what she calls a “volumetric thinker”.

“It’s also the kind of intelligence that sadly lands him in the principal’s office quite a bit, because he’s looking for the whys and wheres, and kind of wants to understand how everything works and doesn’t enjoy sitting in a classroom and being taught reading, writing, and arithmetic,” Peck said during the interview. “What happens when kids are at a fairly young age in middle school is, some of them hit the ceiling in their understanding of math and science. And it becomes very, very difficult to engage all of the students in the way they learn best.”

The question is whether emerging tech like spatial computing can help unshackle unconventional thinkers, or those who, for whatever reason, lose interest and therefore focus in traditional Prussian-style education settings, like Peck’s youngest son (or myself, for that matter).

Individualized curriculums

Although it’s impractical to expect a single teacher to be able to service a group of students in an individualized way, we do see it nevertheless happening in the real world at experimental schools like Agora in Roermond, Netherlands. And with the help of AI, immersive education seems fully capable of offering customized education through a digital format as well.

Peck believes that VR represents an opportunity to create systems that take the pressure off teachers to “teach to the test” or to specific standards, so that they can instead serve as guides to the curriculum, letting each child learn in the way that suits them best personally. This would naturally open up a totally new generation of children who don’t get stunted at 13 and give up on STEM education. They, she says, get an extra six or seven years of STEM education.

The push to make this happen is already underway with social enterprises like TechRow, which already brings immersive technology experiences to over twenty K-12 schools throughout New York City. The company leverages immersive technology to build the capacity of schools to support teachers and improve student engagement with the goal of enhancing learning outcomes.

In June, I described how the next generation of mobile connectivity will allow high-fidelity VR and AR to be streamed to the masses in the frictionless manner in which the tech has always been ultimately intended. Indeed, 5G’s potential is mind-blowing insofar as it will allow emerging tech in general to extend their scope of influence by orders of magnitude that, in turn, will allow immersive education to go live and multiplayer at a scale that makes the experiences as persistent and ubiquitous as real life.

Immersive education goes live

Enter Axon Park, the company that is equalizing access to learning by connecting people globally through VR and AI — and live.

“When you use virtual classrooms, you are no longer constrained by physical space. It doesn’t matter where the students or best instructors in the world are based, everyone can just pop on a headset and be together,” says Taylor Freeman, founder and CEO at Axon Park. “It’s definitely a form of teleportation. It’s going to create incredible opportunities for people who live outside the metropolitan areas where high quality instruction isn’t as accessible. I definitely expect to see a true revolution in learning with this XR platform shift.”

The platform is hosting its first-ever full-semester-length college course, which will be taught completely in VR this fall, featuring industry vet Alex Silkin, co-founder and CTO of Survios, as Axon Park’s first live lecturer. Students join Silkin twice a week to learn how to build best-in-class immersive worlds with one of the pioneers who has helped shape the industry firsthand. And as at traditional institutions, admission is highly competitive; the course is designed for expert-level developers and even offers financial aid for students in need.

“I firmly believe in the potential of VR to revolutionize education. We have already seen how much more engaging education is when we introduce videos and interactive experiences,” says Silkin. “VR takes this to the next level by putting the student right in the center of the action, allowing them to experience the subject matter face to face, rather than through a small square window on a 2D screen.”

That real humans can project as teachers who are accessible at scale is already an awesome enough feat, but the tech naturally opens the door for autonomous avatars to step in as assistants, administrators and, perhaps sooner than we think, immersive guides in their own right. Virtual beings ought to make the very idea of recorded lessons obsolete, and their activity will likely complement the work of human teachers rather than overlap or interfere with it.

“We will build Axon into an embodied AI capable of teaching a student in a custom and adaptive way, similar to how a human tutor might work with a student. Over time, AI instructors will likely become the primary form of knowledge transfer, whereas human teachers will be much more focused on social and emotional intelligence,” Freeman told me. That’s quite a future.

Source:

https://venturebeat-com.cdn.ampproject.org/c/s/venturebeat.com/2019/07/26/the-future-of-immersive-education-will-be-live-social-and-personalized/amp/

Photo: Live VR training with Axon Park

Todd Maddox reports on why A/B tests are sub-optimal in building and optimizing immersive training and performance tools.

I have written extensively on the potential of virtual reality (VR) and augmented reality (AR) to revolutionize training and performance in healthcare, manufacturing, and corporate learning, to name a few. Learning is about the experience, and VR and AR are grounded in experiential learning. VR offers a virtual experience and AR overlays computer-generated assets onto the real world to drive learning and performance.

Unfortunately, too often the primary focus is on the technology, with much less focus on optimizing the interface and the experience for the user (the UI/UX). Although computing power, specialized graphics, controllers, and the like are important, their value is diminished if they don’t engage the user’s brain in a way that is effective at achieving the desired training and performance goal. Too often the technological “wow” factor dominates when what is more important is how the user’s brain is engaged to achieve a set goal.

To optimize the UI/UX we must incorporate what is known about the brain and how it processes information, then apply the scientific method to test hypotheses and to identify the optimal solution. For example, one might test the hypothesis that a hands-free AR training solution leads to faster task completion than a hand-held AR training solution. To test this hypothesis, suppose that 10 workers completed the task with the hands-free AR solution, and 10 other workers completed the task with the hand-held AR training solution.

Under the assumption that the only difference between the two groups of workers is the type of training device, the time to completion would be noted for each of the 20 workers and a simple statistical test (called a Student’s t-test) would be conducted to compare the time to completion between the two groups. Although a detailed description of probability theory that underlies statistical reasoning is beyond the scope of this report, suffice it to say that the outcome of this statistical test allows one to claim (or not claim) beyond a “statistical reasonable doubt” that the hands-free AR solution leads to faster time to completion than the hand-held AR solution.
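
As a rough illustration of the comparison described above, the following sketch computes a two-sample Student's t statistic with pooled variance using only the Python standard library. The completion times are invented for illustration and do not come from any real study; `student_t` and `T_CRITICAL` are hypothetical names, and the critical value is the standard two-tailed table value for 18 degrees of freedom at the 5 percent level.

```python
from math import sqrt
from statistics import mean, variance


def student_t(group_a, group_b):
    """Return (t statistic, degrees of freedom) for a two-sample Student's t-test."""
    n_a, n_b = len(group_a), len(group_b)
    df = n_a + n_b - 2
    # Pooled variance: assumes both groups share the same population variance.
    pooled = ((n_a - 1) * variance(group_a) + (n_b - 1) * variance(group_b)) / df
    standard_error = sqrt(pooled * (1 / n_a + 1 / n_b))
    return (mean(group_a) - mean(group_b)) / standard_error, df


# Hypothetical completion times in minutes, 10 workers per device (made-up data).
hands_free = [21, 23, 20, 24, 22, 19, 25, 22, 21, 23]
hand_held = [26, 24, 27, 25, 28, 23, 26, 29, 25, 27]

t_stat, df = student_t(hands_free, hand_held)

# Two-tailed critical value of t for alpha = 0.05 and 18 degrees of freedom.
T_CRITICAL = 2.101
significant = abs(t_stat) > T_CRITICAL

print(f"t = {t_stat:.2f}, df = {df}, significant = {significant}")
```

With these made-up numbers the statistic is far beyond the critical value, so the difference between the two groups would count as statistically significant. In practice a statistics library such as SciPy (`scipy.stats.ttest_ind`) would typically be used to obtain an exact p-value.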

The experiment described above is referred to as an A/B test in the corporate sector. It is the simplest type of experiment that can be conducted, but it is extremely powerful and is used extensively. If you want to determine whether a new drug has the desired effect, you might conduct an A/B test of the drug against a placebo. If you want to determine if your new technology or product is superior to the existing gold standard, you might conduct an A/B test. An A/B test allows you to test the hypothesis that one group is different from another on some outcome measure, such as time to completion.

I spent 25 years teaching statistics and experimental design to university students, and during that time I conducted hundreds of experiments in my laboratory. I can count on one hand the number of A/B tests that I conducted.

Why so few?

Although there is nothing wrong with an A/B test, factorial designs provide more value at no additional cost. In an A/B test there is one factor. In the example above, the factor would be the type of AR training device (hands-free vs. hand-held). Suppose a second factor was included that represented time pressure. Specifically, suppose that 5 of the workers using the hands-free device and 5 of the workers using the hand-held device were simply told to complete the task, whereas the other 5 from each group were told that they must complete the task within 15 minutes when it normally takes about 25 minutes. This factorial design has four groups of workers: hands-free/no time pressure, hands-free/time pressure, hand-held/no time pressure, and hand-held/time pressure. More importantly, it allows one to test three hypotheses.

- Hypothesis 1: Does a hands-free device speed time to completion?

- Hypothesis 2: Does time pressure speed or slow time to completion?

- Hypothesis 3: Does the presence or absence of time pressure affect time to completion differently for hands-free vs. hand-held AR training tools?

In the simple A/B test example, 20 workers completed the task, and Hypothesis 1 was tested. In the factorial design example, 20 workers completed the task and all three hypotheses could be tested. This is the power of a factorial design over an A/B test, and this is one reason why the overwhelming majority of experimental science, especially behavioral science, relies exclusively on factorial designs. A second reason follows from Hypothesis 3.

Hypothesis 3 is especially interesting and addresses the possibility that there is an “interaction” between time pressure and the nature of the device on time to completion. I would predict that a hands-free device is much less susceptible to the deleterious effects of time pressure than a hand-held device. Of course, this is an empirical question.
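
To make the three hypotheses concrete, here is a minimal sketch of a balanced 2x2 analysis of variance in plain Python. The data are invented (5 hypothetical workers per cell), the function name `two_by_two_anova` is an assumption, and the pattern in the numbers is chosen only to illustrate the kind of interaction predicted above. Each effect has one degree of freedom, so its F ratio is its sum of squares divided by the mean squared error.

```python
from statistics import mean


def two_by_two_anova(cells):
    """F ratios for a balanced two-factor design.

    cells maps (level_of_factor_a, level_of_factor_b) to a list of observations,
    with the same number of observations in every cell.
    """
    a_levels = sorted({a for a, _ in cells})
    b_levels = sorted({b for _, b in cells})
    n = len(next(iter(cells.values())))  # observations per cell

    grand = mean([y for ys in cells.values() for y in ys])
    cell_mean = {key: mean(ys) for key, ys in cells.items()}
    a_mean = {a: mean([cell_mean[(a, b)] for b in b_levels]) for a in a_levels}
    b_mean = {b: mean([cell_mean[(a, b)] for a in a_levels]) for b in b_levels}

    # Sums of squares for the two main effects and the interaction.
    ss_a = n * len(b_levels) * sum((a_mean[a] - grand) ** 2 for a in a_levels)
    ss_b = n * len(a_levels) * sum((b_mean[b] - grand) ** 2 for b in b_levels)
    ss_ab = n * sum(
        (cell_mean[(a, b)] - a_mean[a] - b_mean[b] + grand) ** 2
        for a in a_levels
        for b in b_levels
    )

    # Within-cell (error) variability.
    ss_error = sum((y - cell_mean[key]) ** 2 for key, ys in cells.items() for y in ys)
    df_error = len(cells) * (n - 1)
    mse = ss_error / df_error

    # With two levels per factor, every effect has 1 degree of freedom.
    return {
        "factor_a": ss_a / mse,
        "factor_b": ss_b / mse,
        "interaction": ss_ab / mse,
        "df_error": df_error,
    }


# Hypothetical completion times in minutes (made-up data, 5 workers per cell).
times = {
    ("hands-free", "no pressure"): [24, 25, 26, 25, 25],
    ("hands-free", "pressure"): [22, 23, 24, 23, 23],
    ("hand-held", "no pressure"): [26, 27, 28, 27, 27],
    ("hand-held", "pressure"): [30, 31, 32, 31, 31],
}

result = two_by_two_anova(times)

# Critical value of F(1, 16) at alpha = 0.05, from standard tables.
F_CRITICAL = 4.49
for effect in ("factor_a", "factor_b", "interaction"):
    print(effect, round(result[effect], 2), result[effect] > F_CRITICAL)
```

Here `factor_a` is the device (Hypothesis 1), `factor_b` is time pressure (Hypothesis 2), and `interaction` corresponds to Hypothesis 3. The same 20 observations feed all three tests, which is the efficiency argument made above.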

Interactions abound in the real world, especially the world of behavioral science. We have all heard of drug interactions. For example, some research suggests that a glass or two of wine can reduce stress, and a small dose of Xanax can reduce anxiety, but combining alcohol and Xanax can be fatal. That is a drug interaction. The combined effect of alcohol and Xanax is not simply the sum of the independent effects. Rather the effect is interactive, large, and potentially fatal.
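
Put in symbols, and using standard textbook notation that does not appear in the original article, a two-factor design is commonly modeled as a grand mean plus two main effects, an interaction term, and noise. For a 2x2 design, an interaction is present when the effect of one factor differs across the levels of the other:

```latex
% Two-factor model: observation k in cell (i, j)
Y_{ijk} = \mu + \alpha_i + \beta_j + (\alpha\beta)_{ij} + \varepsilon_{ijk}

% Interaction in a 2x2 design: the difference of differences is non-zero
(\mu_{11} - \mu_{12}) - (\mu_{21} - \mu_{22}) \neq 0
```

If the interaction term is zero for every cell, the combined effect is simply the sum of the two independent effects. The drug example describes the opposite case.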

Anytime a human is involved and is physically processing something like a drug, or is mentally processing and engaging with technology, interactions are likely. This is the second reason why factorial designs are so popular. You will miss important findings if you focus exclusively on A/B tests.

The human brain is composed of multiple learning and performance systems. These include the cognitive learning and performance system, which relies on the prefrontal cortex and is constrained by working memory and attentional limitations. The behavioral skills learning and performance system relies on the striatum and learns stimulus-response associations via incremental, dopamine-mediated reward and punishment learning. Interestingly, this system is not constrained by working memory and attention. In fact, “overthinking” hurts behavioral learning.

The emotional learning and performance system in the brain relies on the amygdala and other limbic structures. This addresses issues of stress, pressure, and situational awareness broadly speaking. Finally, the experiential learning and performance system in the brain represents the sensory and perceptual aspects of the environment and relies on the occipital, temporal, and parietal lobes.

Each system has its own unique processing characteristics, and thus each system is optimized with different technologies and processes. For example, the critical bottleneck within the cognitive system is cognitive load. One almost always wants to reduce cognitive load. This means that the what, where, and when of VR or AR assets must be examined to determine the optimal combination to achieve a given goal. The best way to do this is with a factorial design.

The what, where, and when are each a separate factor that must be combined and studied factorially to optimize performance. The nature and timing of corrective feedback in the behavioral system must be examined with a factorial design, and of course the emotional and motivational aspects (stress, pressure, engagement) as well as the detailed sensory and perceptual processes must be examined.

The basic science literature is full of fascinating results in each of these domains that can guide VR and AR product optimization, but in the end, one has to rely on science and factorial designs to “get it right”. The plethora of data that can be extracted from VR and AR solutions and the ease of manipulating relevant factors (the what, where, and when of assets, or the nature and timing of the feedback) make factorial designs straightforward to conduct.

VR and AR training and performance tools have already provided significant ROI relative to traditional training approaches. If you have a basic understanding of the breadth of neural engagement with these technologies, then you are not surprised by this ROI. Even so, these initial ROI benchmarks are a starting point. Much more ROI awaits those organizations that are ready to conduct the factorial design experiments necessary to find the “sweet spots” in the UI/UX, and there are many, that will show the true value of these technologies.

These immersive training and performance technologies are still in the early stage. They are reaping benefits for their users because of the way that they engage the brain relative to traditional approaches. As with any young science or technology, the early wins are often large, but more wins are to follow if solid research and development is conducted that leverages what we know about the brain, applies the scientific method, and uses factorial designs as a window onto the interactions that optimize UI/UX.

Source:

http://www.virtualrealitypulse.com/edition/weekly-oculus-htc-2019-07-13?open-article-id=10944704&article-title=building-better-xr-training&blog-domain=techtrends.tech&blog-title=tech-trends-vr

The applications for virtual reality (VR) are almost unlimited, as long as there is someone willing and able to create the content. VR games and films currently attract the most attention, but training programs that use the technology are increasingly appearing.

Training may not have been at the top of the list for the developers of Facebook's (WKN:A1JWVX) Oculus or HTC's (WKN:A0RGRD) Vive when they designed the headsets. But that is the direction in which the market is moving, and everyone in VR should take this segment more seriously.

Major retailers are betting on VR

In 2018, Walmart (WKN:860853) introduced the Oculus Go VR headset to train employees ahead of the stressful holiday season. Staff were trained in “new technology, soft skills like empathy and customer service, and compliance”.

New technologies deployed by Walmart, such as the Pickup Tower, were even rolled out with the help of VR training before the equipment arrived in stores. Training can reduce errors with new technologies and shorten the rollout time for new products and services, both of which yield a positive return.

Bringing everyday scenarios to life

VR does not always have to revolve around retail. Body-camera maker Axon (WKN:A2DPZU) recently announced that the Chicago police are using an empathy training scenario in VR as part of their crisis intervention training plan. The training runs through scenarios involving people with “mental health issues, crises, or psychotic episodes” and is intended to give officers a new perspective on situations they might find themselves in.

Guiding police officers through a realistic scenario can be more effective than other teaching methods, and is now scalable with standalone headsets such as the Oculus Go and Quest.

Taking sports training to the next level

One area where VR training has been adopted fairly quickly is sports. Using VR as a training tool can let athletes practice something an unlimited number of times without as much physical exertion. During the 2017 season, NFL quarterback Case Keenum logged 2,500 VR practice reps while he was a backup. He then led the Vikings to an 11-3 record once he took over as starter.

There are also VR simulators for goaltenders and stickhandling in ice hockey, batting simulators in baseball, and even ski simulations. Virtual reality training can add value in almost any sport, according to its proponents, and considerably reduces the risk of injury during training.

Training is shaping the dynamics of the VR industry

With millions of people worldwide completing various training programs in VR and tens of thousands of headsets being sold to companies that run training, it is conceivable that more people have used VR for training than for gaming. This matters because the platforms that companies have developed are suited to different use cases.

Oculus's Go and Quest headsets are popular for training, but they are designed primarily for consumers. They are cheaper and less powerful than competing devices from HTC, and the Oculus distribution platform is designed for selling games and other consumer content.

HTC has developed a more open platform tailored specifically to businesses. It is easier to run custom content on the devices, and the headsets are higher-end (and more expensive) than those from Oculus. The downside is that Oculus leads in standalone headsets, which are attractive for training because many people can be brought into VR at the same time.

As new device and platform upgrades come to market, keep an eye on corporate training, because more and more headset makers are trying to win this segment. It is an extremely valuable part of the VR ecosystem and may gain importance well before consumer VR applications do.

Randi Zuckerberg, a former director of market development and spokeswoman for Facebook and sister of its CEO, Mark Zuckerberg, is a member of The Motley Fool's board of directors.

This article was written in English by Travis Hoium and published on Fool.com on 12 July 2019. It was translated so that our German readers can take part in the discussion.

The Motley Fool owns and recommends Axon Enterprise and Facebook.

Motley Fool Deutschland 2019

Source:

www.fool.de

https://boerse-express.com/news/articles/virtual-reality-schulungen-werden-immer-beliebter-130430

Photo © www.fool.de

Virtuleap is certainly one of the most promising startups I have talked with in 2019. I had the pleasure of speaking with its CEO, Amir Bozorgzadeh, a few weeks ago (sorry for the delay in publishing the article, Amir!) and I was astonished by his ambitious project: understanding the emotions of the user while he/she is simply using a commercial headset. This means that you could just wear your plain Oculus Go, without any additional sensors, and the Virtuleap software may be able to “read your mind”.

I can read in your mind that you are intrigued, so keep reading…

How Virtuleap was born

Amir Bozorgzadeh told me that he comes from a background in market research and mobile gaming. Thanks to an accelerator program he attended in 2018, he came into contact with virtual reality and was fascinated by it. At the same time, he started writing technical articles as a freelancer for big magazines like VentureBeat and TechCrunch (this may be the reason why you have surely already read his name).

Given his background, he started thinking about performing analytics and market analysis in virtual reality. He was unimpressed by the solutions available at the time, since, as he puts it, companies were just “copying-and-pasting Google Analytics into virtual reality”, while he thought that virtual reality had much more potential for the analytics sector. He believed that copying traditional tools to VR was wrong, so he asked himself “What is the new type of data that can make analytics and consumer insights interesting in AR and VR?” and founded Virtuleap to answer this question.

Analysis of emotions in VR

AR and VR are great technologies for studying user behavior. Virtual reality can emulate reality, which makes it possible to study how users behave in realistic situations. If you add devices like eye trackers, skin conductance sensors, heart rate sensors or even EEG/EMG to the VR experience, it is possible to detect important information about the psychological state of the user who is in VR.

This is of fundamental importance for psychologists, for instance, who need to detect and treat anxiety in patients. Measuring stress levels during a VR experience may help a psychologist find out whether a patient’s arachnophobia is still present by showing him or her a virtual spider. It can also be used in training, to see whether the trainee adapts well to stressful situations, or in marketing, to see whether the user is interested in the product being showcased.

As you can see, there are many applications, but there is a problem: to get reliable results, the user must wear a complicated setup featuring all or some of the devices mentioned above. These are expensive and cumbersome… no consumer actually wants to wear all of them.

Virtuleap and the analysis of psychosomatic insights

Amir noticed that consumer VR headsets have almost none of the above sensors embedded: this means that creators have no access to biometric data. So he asked himself “How can I offer biometric analytic data on the bottom layer of mainstream AR/VR devices? How can I offer biometric data on a cheap device like Oculus Go?”.

Thanks to his passion for psychology, he started studying what he calls “psychosomatic insights”, that is, what can be inferred about the state of the user from body language alone. There are years of scientific studies on animals and human beings that relate bio-language to emotional insights, so he and the other Virtuleap founders started working on porting all this knowledge from the research environment to a viable business product.

Oculus Go is a really simple 3DOF VR device, but it can actually give us 16 channels of body capture. To be exact, this is the data it can provide:

- Headset Data
  - Angular Acceleration
  - Accelerometry
  - Angular Velocity
  - Rotation
  - Camera Rotation
  - Node Pose Position
  - Node Pose Velocity
  - Node Pose Orientation
  - Line of Sight

- Hand Controller Data
  - Rotation
  - Local Rotation
  - Local Position
  - Local Velocity
  - Local Acceleration
  - Local Angular Acceleration
  - Local Angular Velocity
  - Camera Controller Rotation

All this data, obtained from the sensors installed on the Go and its controller, may be used to track the nervous system of the user. By tracking all the movements of the user, including subliminal ones like micro-gestures and micro-motions that he/she is not even aware of, it could be possible to infer something about his/her emotional state.

Using neuroscience research, it may thus be possible to let a user play with a plain Oculus Go and infer whether he/she is angry, bored, stressed or interested during each stage of the game. This is massive. By collecting all this data over time while you perform specific VR tasks, it is also possible to profile your brain, and so understand how you handle stress, how attentive you are, how good you are at problem-solving and so on.

The idea of Virtuleap is to offer a whole framework for emotion detection and analysis for AR and VR. In the long run, the framework will adapt to the sensors the XR system is equipped with: if the user has a plain Oculus Go, the system will just analyze the micro-gestures described above; if the VR headset has eye tracking, the system will use the micro-gestures plus eye data; if there is a brainwave-reading device, it will be used as well, and so on. The more sensors are used, the more reliable the detection will be. But the system should work with all the most common commercial XR headsets, so that the analysis can run with consumers and not only in selected enterprise environments.

The company also plans to adapt the algorithms to the kind of application the user is running: detecting stress levels in a horror game is different from detecting them in a creative application, for instance, because different brain areas are involved, or the same brain areas are involved but with different activity levels. So the company will tailor the detection to different scenarios in order to offer better emotion analysis in each experience.
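To make the “adapt to whatever sensors are there” idea a bit more concrete, here is a minimal sketch in Python. It is purely illustrative: the sensor names, the channel names and the `select_channels` helper are all my own assumptions, not part of any Virtuleap API.

```python
# Hypothetical sensor names and channel groups, made up for illustration only.
CHANNELS_BY_SENSOR = {
    "headset_imu": ["angular_velocity", "angular_acceleration", "rotation"],
    "hand_controller": ["local_velocity", "local_acceleration", "local_rotation"],
    "eye_tracking": ["gaze_direction", "pupil_diameter"],
    "eeg": ["brainwave_bands"],
}


def select_channels(available_sensors):
    """Return the channels to analyze, given the sensors a device exposes."""
    channels = []
    # Iterate over the table (not the input set) so the output order is stable.
    for sensor, sensor_channels in CHANNELS_BY_SENSOR.items():
        if sensor in available_sensors:
            channels.extend(sensor_channels)
    return channels


oculus_go = {"headset_imu", "hand_controller"}
headset_with_eyes = oculus_go | {"eye_tracking"}

print(select_channels(oculus_go))          # motion channels only
print(select_channels(headset_with_eyes))  # motion channels plus eye data
```

The point is only that the analysis code asks what is available and widens its input accordingly, instead of requiring a fixed set of sensors.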

Current status of the project

Virtuleap’s plan is incredibly ambitious, and I think that if Amir is actually able to realize it, his startup has the potential to become incredibly successful and profitable.

But the road ahead is still long and complicated. Currently, the theory that it is possible to go from micro-movement data to a reliable detection of all the emotions of the user has still to be proven, also because current commercial devices like Oculus Go supply noisy data for the analysis. The company has understood how to clean the data it has to analyze and which mathematical models are most promising, but it has only just started moving from research mode to production mode. So it is just at the beginning, and nothing has been properly validated yet.

What it can do now is detect “arousal” states in users. And no, we are not talking about THAT KIND of “arousal” (you pervert!), but about a triggered state of the brain, a spike in emotional activity of any kind. So Virtuleap is currently able to detect when you’re getting emotional for some reason while you’re using VR. But it is currently not able to detect why you’re getting this spike: maybe you’re scared, you’re overly happy, you’re stressed, or you’re aroused in-that-sense. I have to say that in my opinion this is already exciting (my arousal level rose when I heard this!), but of course, it is not enough.
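I don’t know what models Virtuleap actually uses, but the general idea of “a spike of any kind” can be sketched with a very simple stand-in technique: compare each new sample of a motion signal with its recent baseline and flag it when it deviates by several standard deviations (a rolling z-score). The function name, the window size, the threshold and the synthetic signal below are all my own assumptions, chosen only for illustration.

```python
from statistics import mean, stdev


def detect_spikes(signal, window=20, threshold=3.0):
    """Return indices of samples that deviate strongly from the recent baseline."""
    spikes = []
    for i in range(window, len(signal)):
        baseline = signal[i - window:i]
        mu = mean(baseline)
        sigma = stdev(baseline)
        if sigma == 0:
            continue  # flat baseline: no scale to compare against
        if abs(signal[i] - mu) / sigma > threshold:
            spikes.append(i)
    return spikes


# Synthetic head angular speed (rad/s): small jitter with one sudden burst.
calm = [0.10 + 0.01 * ((i * 7) % 5 - 2) for i in range(40)]
signal = calm + [0.60] + calm

print(detect_spikes(signal))
```

On this synthetic signal only the burst sample is flagged. Note that this says nothing about why the spike happened, which is exactly the limitation described above.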

That’s why the company is currently looking for partners in different sectors, in order to gather more data that will make the machine learning system more reliable and able to distinguish between different emotions. These partners are chosen so that the “arousal detection” can already be useful for them, because their experience already has a defined context. For instance, if the user is playing a horror game and a spike in arousal is detected, it is almost certain that it happened because the user is scared… it is not necessary to identify the emotion, because it is obvious. Currently, Virtuleap is partnering with companies in sports streaming, e-commerce, security training, and many others.

Neuroscientists know how to correlate behaviors with the parts of the brain that get activated. So by combining the data about what the user is supposed to be doing with the data detected from the headset, it is easier to understand what is happening in the mind of the user.

The plan is to gather data, train the machine learning system and then validate the results against those from other devices like eye trackers and EEG.

The startup has an internal test app called The Attention Lab. You can enter this virtual lab environment and play little games inside it. One current game, for example, shows you a pirate ship that attacks you, and you have to fight back by launching bombs at it with precise timing. This game is meant to analyze how you cope with stress: how easily you get stressed, how you behave in stressful situations, etc…

After you have played the games, you can return to the virtual laboratory and see the data about your brain that has been collected. You can look at the profile of your brain and better understand your personality. You can also share it with other people and challenge your friends on who is better at stress management, for instance.

At the moment, the app is for internal use, but the company is planning to make it public in the future, also to gather data from more users and train its ML models better. I hope I will be able to try it soon, because I’m curious to experiment with this black magic of reading my emotions without using sensors!

During fall 2019, Virtuleap plans to make its API, reporting and dashboard systems available in beta. So interested companies will already be able to experiment with emotional analysis in VR. The prices won’t be high, says Amir, and will mostly serve to cover the costs of the cloud.

But when I asked Amir how much time is needed for the framework to be complete, including all the possible sensors and giving fully reliable data, he told me that 3 years is a probable timeframe. So the road ahead for this startup is still very long.

Recent pivot to training

The various characteristics that Virtuleap aims at helping you in developing (Image by Virtuleap)

Before publishing this article, I got in touch with Amir again and he gave me a little scoop exclusive to this website (yay!). He told me that Virtuleap is now experimenting with a pivot to brain training technology. That is, using all the expertise that the team has gathered in neuroscience not only to analyze the brain, but also to create VR experiences that can train the brain to develop new characteristics. For instance, you can train yourself to cope better with stress, to orient yourself in new environments and so on. This is very intriguing… and VR has already proved to be very effective for training and educating users. It has a strong influence on the brain.

The Attention Lab should serve this purpose as well, and it will be released in beta in September of this year.

Emotional analysis and privacy

When talking about the collection of emotional data, there is the obvious concern of privacy. Amir told me that his purpose is to help people who have psychological or neurological problems, but he agrees that the technology may be misused by marketers and Zuckerbergs of all kinds.

“With BCI, privacy doesn’t exist. The computer is you”. I think that this quote of his sums up how BCIs have great potential but also create great risks for our lives.

In his opinion, to mitigate this issue, all gathered data should first of all be anonymized by default. Then, every user should be informed with a clear prompt (not long documents in legalese) about what data will be gathered and from which sensors, and be allowed to choose what can be harvested from his/her brain. This should help, even if the industry is moving forward too fast and privacy protection is lagging a bit behind.

A final word for VR entrepreneurs

I could really feel Amir’s passion for AR and VR. I asked him for a piece of advice for people wanting to enter the industry. He told me that he doesn’t like cowboys, people who entered the field just to make money when there was hype.

He also doesn’t like those who are only partly committed to the tech. “You can’t stay with a hand attached to a safe branch and the other hand to AR/VR”. He advises people who want to enter this field to burn the bridges behind them and fully commit to immersive realities. This is what the industry needs, and it is the only way to earn his respect.

He also added that in his opinion all this focus on VR and AR for gaming is wrong: companies should invest more money in enterprise products, in useful solutions, in standards, and in WebVR technology… that is, in making the technology go forward. Gaming is just a little part of VR, he added.

Source:

Virtuleap wants to read (and train) your mind while you play in VR

Podcast on learning and working with and in virtual/augmented reality (VR/AR) and mixed reality (MR).

- Fields of application for VR/AR in the insurance industry – from onboarding processes to the customer experience

- Dates are online for the training to become a Digital Reality Trainer or Digital Reality Learning Architect

- Visit to an industrial group – discussions on the digital twin with VR/AR

- Register for Learnext (1 day congress / 1 day BarCamp)

- White paper “Tips against blurry virtual reality (VR)”

- Join and take part in the Immersive Learning Community

- Request the roundtable “information pack”

Listen to episode 023 of the Immersive Learning Podcast

As VR applications and cinematic experiences grow in popularity, so does the need to design, create and mix the audio for these virtual spaces.

Technically speaking, VR audio is binaural: in other words, a different audio signal is fed to each ear in order to create the perception of a three-dimensional sound field. In a sense, stereophonic sound is binaural too, but the words aren’t synonymous: stereo audio can’t recreate a complete and natural three-dimensional sound field, whereas that’s what binaural audio is all about. Stereo works very well, obviously, for creating left-right positioning and, if you know the tricks, can create the impression of near-far as well.

When coupled with conventional visual media, this works just fine, because the action on a two-dimensional screen maps naturally to a stereo sound field. Surround sound creates a more immersive aural experience than stereo, but just like stereo it is locked to a fixed, two-dimensional point of view, and just like stereo, it has no way of capturing or simulating the up-down position of a sound source.

What’s needed for cinematic VR, then, is a method of capturing panoramic three-dimensional sound, and a way of mixing and reproducing that sound independently of the listening position and speaker configuration – surprisingly, the technology for doing this, Ambisonics, has been around since the 1970s.

Ambisonics is a full-sphere surround-sound format, and works on much the same principles as mid-side stereo. With mid-side, the mid channel carries a collective signal of the sound at the listening position, while the side channel carries left-right positional offset information; in an Ambisonics system, three side channels are employed to capture positional information in all three dimensions (‘higher order’ Ambisonics use more side channels for greater positional accuracy).
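The mid-side analogy can be made concrete with a short Python sketch. It assumes the traditional first-order B-format convention (often called FuMa), in which W is the omnidirectional “mid” signal scaled by 1/√2 and X, Y and Z point forward, left and up; other conventions order and weight the channels differently. The function names are illustrative and not taken from any particular tool.

```python
import math


def mid_side_to_left_right(mid, side):
    """Decode one mid-side sample pair into a left/right stereo pair."""
    return mid + side, mid - side


def encode_first_order(sample, azimuth_deg, elevation_deg):
    """Encode a mono sample into first-order B-format (W, X, Y, Z).

    Assumes FuMa-style weighting: W is scaled by 1/sqrt(2).
    Azimuth is measured counter-clockwise from straight ahead,
    elevation upward from the horizontal plane.
    """
    az = math.radians(azimuth_deg)
    el = math.radians(elevation_deg)
    w = sample / math.sqrt(2)                 # omnidirectional "mid" component
    x = sample * math.cos(az) * math.cos(el)  # front-back offset
    y = sample * math.sin(az) * math.cos(el)  # left-right offset
    z = sample * math.sin(el)                 # up-down offset
    return w, x, y, z


# A source directly to the listener's left, at ear height.
print(encode_first_order(1.0, 90, 0))
```

For a source hard left, nearly all of the directional energy lands in Y, just as a hard-panned sound in mid-side stereo lands in the side channel.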

And because Ambisonics captures a full-sphere sound field, it’s possible for the apparent listening position within this field to be modified by, in effect, rotating the sphere – just what’s needed for VR! Similarly, when mixing for VR, Ambisonics panners allow you to position non-Ambisonic parts within the 3D sound field, in much the same way as a stereo panner lets you set the left-right position of a mono part within a stereo sound field.
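Rotating the sphere is cheap to do. As a minimal sketch, assuming first-order B-format with X pointing forward, Y left and Z up, a rotation about the vertical axis (yaw) is a plain 2D rotation of X and Y, while W and Z are untouched. The function names are illustrative; real tools also handle pitch and roll and work on whole audio buffers rather than single samples.

```python
import math


def rotate_yaw(w, x, y, z, angle_deg):
    """Rotate a first-order B-format sample about the vertical axis.

    Positive angles turn the sound field counter-clockwise (toward the left).
    W (omnidirectional) and Z (height) are unaffected by a yaw rotation.
    """
    a = math.radians(angle_deg)
    x_rot = x * math.cos(a) - y * math.sin(a)
    y_rot = x * math.sin(a) + y * math.cos(a)
    return w, x_rot, y_rot, z


def compensate_head_yaw(w, x, y, z, head_yaw_deg):
    """Counter-rotate the field so sources stay fixed when the head turns."""
    return rotate_yaw(w, x, y, z, -head_yaw_deg)


# A source straight ahead (X = 1). The listener turns 90 degrees to the left,
# so the source should now sit on the listener's right (negative Y).
front = (1 / math.sqrt(2), 1.0, 0.0, 0.0)
print(compensate_head_yaw(*front, 90))
```

This is the operation an HMD drives in real time: the head-tracking angle is fed in, the field is counter-rotated, and only then is the result rendered to headphones.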

Ambisonic pathways

There are actually quite a few Ambisonic microphones on the market now, and custom-built rigs of omni- and bi-directional mics are also common. Good examples of these include the Sennheiser Ambeo, NT-SF1 (and Soundfield app) by RØDE, Core Sound’s TetraMic and the Zoom H3-VR. Most mainstream DAWs support Ambisonics either natively (Pro Tools, Nuendo and Cubase, for example) or via plug-ins such as the Waves B360 Ambisonics Encoder. This support allows you to create a multi-channel Ambisonics mix while monitoring via stereo headphones and allows the listening direction to be modified, either by an HMD or via onscreen controls.

There are also tools for syncing your DAW with a VR video player, although this is a clumsy way to work because you can’t see both your DAW’s controls and the scene you’re working on at the same time, and when viewing the VR scene, there’s no guarantee you’ll even be facing your workstation. Solutions will come, but for now, this clumsiness is par for the course.

Once you’ve got DAW and HMD playing nicely together, you then have to face the biggest challenge of VR audio: nothing you know is relevant any more! Reverbs don’t really work in this all-encompassing context, and create a confusing and unrealistic aural environment; positioning point-source sounds, such as a voice or car horn, is easy enough, but convincingly simulating their reflections and echoes within the virtual environment is not; positioning wider, diffuse sounds, like ambience and Foley, and making them move realistically with the point of view, is also difficult but not impossible. For example, the Ambisonics mode of Steinberg’s MultiPanner has a number of tools for controlling the three-dimensional position, source size and field size of a sound.

However, capturing natural sound in a real space using a full-sphere mic will always produce a more convincing result than trying to simulate sound effects, ambience and Foley with samples and reverb; in the absence of an Ambisonic mic, a mid-side pair will capture a sound that works reasonably well within an Ambisonic system.

As with most things, you can find plenty of discussions on the web about VR audio, and these are certainly useful in helping you set off on the right course. But if you’re planning on moving more seriously into VR audio production then the best thing to do is experiment, fail, and then experiment some more! Every innovation in technology has been essentially founded on that principle. With VR technology accelerating at the rate it is, it makes sense to arm yourself with the technical knowledge that could potentially become the norm for audio production in years to come.

Source:

https://vrroom.buzz/vr-news/tech/recording-and-mixing-audio-virtual-reality

Photo: Ambisonic mixing requires a different understanding of how (and where) your audience will hear the various elements of your track.